Understanding the Lifecycle of LLM Development: Comprehensive Insights

Introduction

Welcome to an overview of the development lifecycle of Large Language Models (LLMs). Understanding how LLMs are built is useful for anyone who wants to apply them effectively. This post walks through the entire lifecycle, based on a detailed 1-hour presentation. The presentation is divided into segments, each covering one part of the development process, from the initial implementation of the architecture to the different stages of finetuning.

Using LLMs: Practical Applications

The journey begins with understanding the practical applications of LLMs. Large Language Models are versatile tools that can be used in a myriad of ways, including text generation, translation, and even as conversational agents. The introduction segment of the presentation provides a foundational understanding of how LLMs can be applied in real-world scenarios, setting the stage for more in-depth exploration.

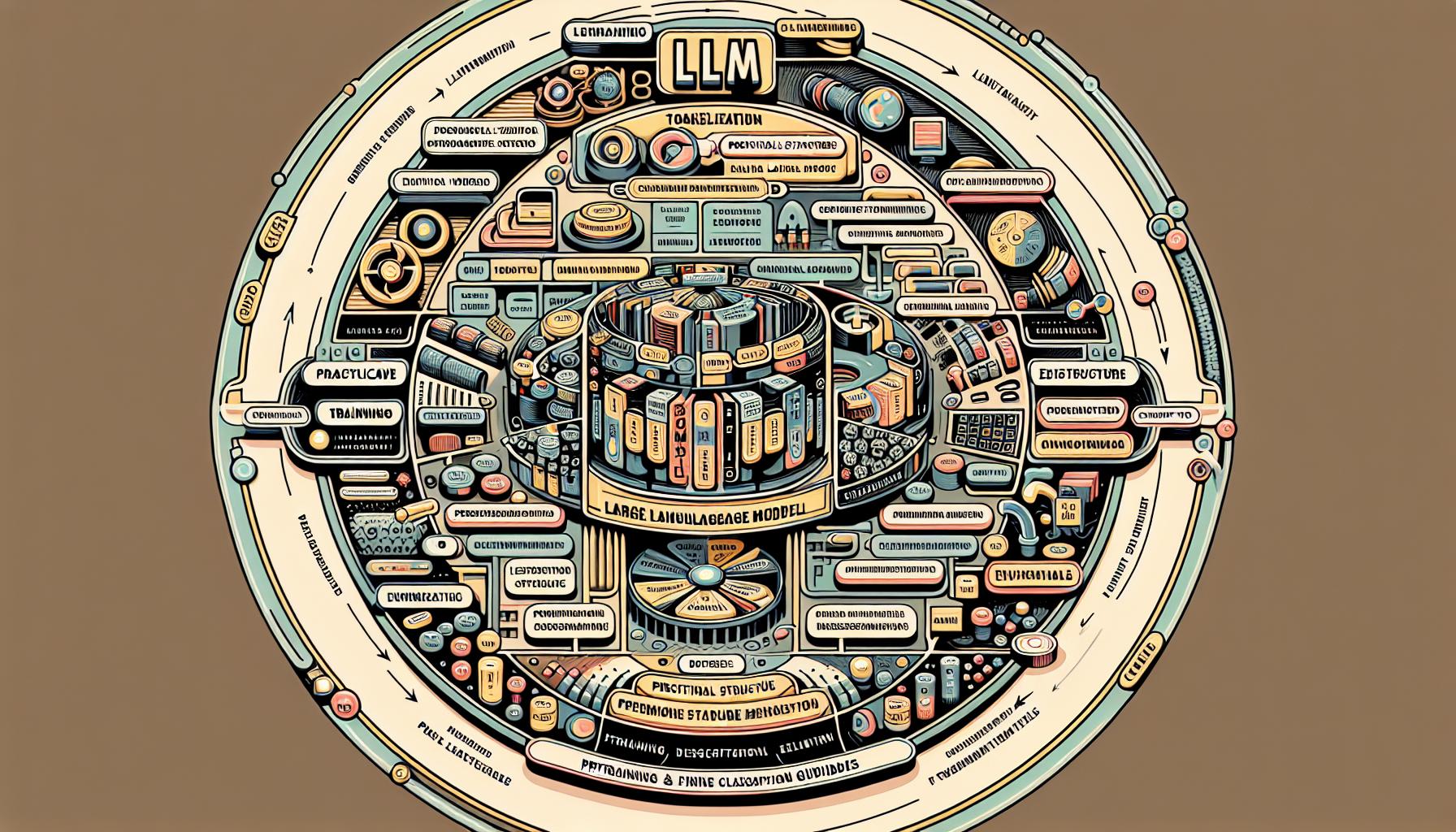

The Stages of Developing an LLM

Developing an LLM is a multi-stage process, and each stage has its own challenges and considerations. The presentation highlights the following key stages:

- Dataset selection and preparation

- Tokenization

- Pretraining

- Finetuning

- Evaluation

These stages form the backbone of LLM development, ensuring that the model is both robust and versatile.

The Importance of Dataset Selection

One of the most critical factors in LLM development is the selection of datasets during the pretraining stage. A well-chosen dataset can significantly impact the performance of the model. Here are some key factors to consider:

- Relevance: The dataset should be relevant to the task at hand.

- Quality: High-quality datasets free from errors and biases lead to better performance.

- Diversity: A diverse dataset ensures that the model can generalize well across different contexts.

Understanding these factors helps in creating a strong foundation for the model, leading to better outcomes in subsequent stages.
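To make the quality factor more concrete, here is a minimal sketch of one common cleaning step: dropping very short documents and exact duplicates. The function name `clean_corpus` and the `min_words` threshold are assumptions made for this illustration, not something taken from the presentation, and real pipelines usually apply many more filters.

```python
def clean_corpus(documents, min_words=5):
    """Drop very short documents and exact duplicates.

    Duplicates are detected after lowercasing and collapsing whitespace.
    Both the function name and the min_words threshold are illustrative.
    """
    seen = set()
    cleaned = []
    for doc in documents:
        normalized = " ".join(doc.lower().split())
        if len(normalized.split()) < min_words:
            continue  # too short to be useful training text
        if normalized in seen:
            continue  # already kept an equivalent document
        seen.add(normalized)
        cleaned.append(doc.strip())
    return cleaned


docs = [
    "Large language models are trained on large text corpora.",
    "large language models  are trained on large text corpora.",
    "Too short.",
    "Tokenization splits text into smaller units called tokens.",
]
cleaned = clean_corpus(docs)
print(len(cleaned))
```

Only the first and last documents survive here: the second is a duplicate of the first after normalization, and the third falls below the word threshold.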

Generating Coherent Multi-Word Outputs

Producing coherent multi-word outputs is a crucial aspect of LLM functionality. An LLM generates text one token at a time, and each newly generated token is appended to the input before the next one is predicted. The techniques discussed in the presentation focus on making this step-by-step process yield coherent, human-like text.
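The token-by-token generation loop can be sketched as follows. This is a simplified illustration under stated assumptions: `toy_next_token` and `TOY_TABLE` are hypothetical stand-ins for a trained model, and the loop always picks a single next token (greedy decoding), whereas real systems often sample from a probability distribution.

```python
def generate(next_token_fn, prompt_tokens, max_new_tokens, eos_token="<eos>"):
    """Repeatedly predict the next token and append it to the sequence."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = next_token_fn(tokens)
        if next_token == eos_token:
            break  # the model signalled the end of the text
        tokens.append(next_token)
    return tokens


# Hypothetical stand-in for a trained model: a fixed lookup on the last token.
TOY_TABLE = {"the": "model", "model": "generates", "generates": "text", "text": "<eos>"}


def toy_next_token(tokens):
    return TOY_TABLE.get(tokens[-1], "<eos>")


output = generate(toy_next_token, ["the"], max_new_tokens=10)
print(" ".join(output))
```

The important part is the loop itself: the output of one step becomes part of the input for the next, which is how a model that only predicts one token can produce multi-word text.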

Tokenization: Breaking Down Text

Tokenization is the process of breaking down text into smaller units called tokens. This stage is vital for the model to understand and process the text effectively. The presentation delves into different methods of tokenization, highlighting their significance in the overall development process.
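As a rough illustration, the sketch below splits text into words and punctuation marks and maps each token to an integer ID. It is a deliberately simple scheme chosen for readability; the helper names (`split_text`, `build_vocab`, `encode`, `decode`) are assumptions for this example, and production LLMs typically use subword methods such as byte pair encoding instead.

```python
import re


def split_text(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def build_vocab(texts):
    """Assign an integer ID to every distinct token; ID 0 is reserved for unknowns."""
    tokens = sorted({tok for text in texts for tok in split_text(text)})
    vocab = {tok: i for i, tok in enumerate(tokens, start=1)}
    vocab["<unk>"] = 0
    return vocab


def encode(text, vocab):
    """Convert text to token IDs, mapping unseen tokens to <unk>."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in split_text(text)]


def decode(ids, vocab):
    """Convert token IDs back to a space-separated string."""
    id_to_token = {i: tok for tok, i in vocab.items()}
    return " ".join(id_to_token[i] for i in ids)


vocab = build_vocab(["Hello, world!"])
ids = encode("Hello, LLM world!", vocab)
print(ids)
print(decode(ids, vocab))
```

The word "LLM" is not in the vocabulary, so it is mapped to the `<unk>` ID. Subword tokenizers avoid this problem by breaking unknown words into smaller known pieces.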

Pretraining Datasets and Their Impact

The datasets used during the pretraining stage play a crucial role in shaping the capabilities of the LLM. The presentation discusses the various types of pretraining datasets, emphasizing their impact on the model's performance. Quality, diversity, and relevance are key factors that determine the effectiveness of these datasets.

LLM Architecture: Internal Structure and Design

The architecture of an LLM is its internal structure and design. This segment of the presentation examines the various components that make up an LLM, including layers, attention mechanisms, and other architectural elements. Understanding these components helps in grasping how LLMs function and how different architectural choices can affect their performance.
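To give a feel for what an attention mechanism computes, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes a single attention head and omits the learned projection matrices and the causal mask that LLMs normally use, so it should be read as an illustration of the core calculation rather than a full layer.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
context = attention(queries, keys, values)
print(context)
```

Because both keys are identical in this example, the query attends to them equally and the result is simply the average of the two value vectors.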

Pretraining: The Extensive Initial Stage

Pretraining is an extensive initial stage where the model is exposed to vast amounts of data. This stage is crucial for the model to learn underlying patterns and structures in the language. The presentation provides an in-depth look at the pretraining process, methods used, and their significance in the overall lifecycle of LLM development.
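Pretraining is commonly framed as next-token prediction: at each position, the model is penalized according to how little probability it assigned to the token that actually comes next. The sketch below computes that average cross-entropy loss by hand. The function name `next_token_loss` and the example probabilities are made up for illustration; in practice a deep learning framework computes this from the model's outputs.

```python
import math


def next_token_loss(predicted_probs, token_ids):
    """Average cross-entropy for next-token prediction.

    predicted_probs[i] is the model's probability distribution over the
    vocabulary for the token that follows position i.
    """
    targets = token_ids[1:]  # each position is trained to predict its successor
    losses = [-math.log(probs[t]) for probs, t in zip(predicted_probs, targets)]
    return sum(losses) / len(losses)


# Made-up example: a 3-token sequence over a 3-token vocabulary.
token_ids = [0, 1, 2]
predicted_probs = [
    [0.1, 0.8, 0.1],    # after token 0, the model gives token 1 probability 0.8
    [0.25, 0.25, 0.5],  # after token 1, the model gives token 2 probability 0.5
]
loss = next_token_loss(predicted_probs, token_ids)
print(round(loss, 4))
```

Training adjusts the model's parameters to lower this loss, which is equivalent to assigning higher probability to the text that actually appears in the dataset.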

Finetuning Methods: Classification, Instruction, and Preference

Finetuning is a critical stage that adapts the pretrained model for specific tasks. The presentation covers three main finetuning methods:

- Classification Finetuning: This method adapts the model for classification tasks, enhancing its ability to categorize and label data accurately.

- Instruction Finetuning: This involves tuning the model to follow specific instructions, making it more adept at performing guided tasks.

- Preference Finetuning: This method aligns the model's responses with human preferences, for example by training on pairs of preferred and less preferred responses, which improves its usability and user experience.

Each of these methods impacts the model's performance and usability in different ways, making them essential components of the LLM development lifecycle.
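One way to see the difference between the three methods is to look at the kind of training example each one typically uses. The records and the prompt template below are hypothetical, chosen only to illustrate the shape of the data; the presentation may use different formats.

```python
# Classification finetuning: input text paired with a label.
classification_example = {
    "text": "You won a free prize, click here!",
    "label": "spam",
}

# Instruction finetuning: an instruction paired with the desired response.
instruction_example = {
    "instruction": "Translate to German: Good morning",
    "response": "Guten Morgen",
}

# Preference finetuning: one prompt with a preferred and a rejected response.
preference_example = {
    "prompt": "Explain what a token is.",
    "chosen": "A token is a small unit of text, such as a word or part of a word.",
    "rejected": "Tokens are things.",
}


def format_instruction(example):
    """Render an instruction example as a single training string.

    The '### Instruction' / '### Response' template is an assumed layout.
    """
    return (
        f"### Instruction:\n{example['instruction']}\n\n"
        f"### Response:\n{example['response']}"
    )


print(format_instruction(instruction_example))
```

The model architecture can stay largely the same across the three methods. What changes is the data and the training objective applied to it.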

Evaluating LLMs: Methods and Challenges

Evaluation is a critical stage in the LLM development lifecycle. Various methods are used to assess the performance of LLMs, but each comes with its own set of challenges and limitations. The presentation provides an overview of these evaluation methods, discussing their pros and cons. It also highlights the primary challenges encountered, such as biases in evaluation metrics and the difficulty in measuring certain aspects of language understanding.
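A small example shows why measurement is difficult. The sketch below implements exact-match accuracy, one of the simplest possible metrics. The function names and sample data are assumptions for illustration and are not taken from the presentation.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().strip().split())


def exact_match_accuracy(predictions, references):
    """Fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    matches = sum(
        normalize(p) == normalize(r) for p, r in zip(predictions, references)
    )
    return matches / len(references)


predictions = ["Paris", "The answer is 4", "berlin "]
references = ["paris", "4", "Berlin"]
score = exact_match_accuracy(predictions, references)
print(round(score, 2))
```

The second prediction is correct in substance but is counted as wrong because its wording differs from the reference. This is one illustration of how a metric can fail to capture what a model actually knows.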

Practical Guidelines: Pretraining and Finetuning

The presentation concludes with practical guidelines for better pretraining and finetuning practices. These rules of thumb are designed to help practitioners navigate the complexities of LLM development effectively. They provide actionable insights that can lead to improved model performance and more efficient development processes.

Conclusion

This detailed exploration of the LLM development lifecycle offers valuable insights for both beginners and seasoned professionals. By understanding each stage and the key considerations involved, practitioners can better navigate the complexities of LLM development, leading to more effective and powerful models.

FAQs

What are the key factors to consider when selecting datasets for the pretraining stage?

Key factors include relevance, quality, and diversity of the dataset. These ensure that the model can perform effectively across different contexts.

How do different finetuning methods (classification, instruction, preference) specifically impact the performance and usability of LLMs?

Classification finetuning enhances the model's ability to categorize and label data accurately. Instruction finetuning makes the model more adept at following instructions, while preference finetuning aligns its responses with user preferences, which improves usability.

What are the primary challenges and limitations encountered in the evaluation of LLMs?

Challenges include biases in evaluation metrics, difficulty in measuring certain aspects of language understanding, and the limitations of current methods in capturing the full scope of the model's capabilities.

Happy viewing!