Bypassing LLM Refusal Training with Past Tense Prompts: A Comprehensive Exploration

Introduction

Artificial intelligence, particularly large language models (LLMs), has advanced substantially in recent years. With this progress, safety measures aimed at preventing misuse have come to the forefront. LLMs are typically aligned through supervised fine-tuning (SFT) or reinforcement learning from human feedback (RLHF), which includes refusal training so that the model declines harmful or undesirable requests, such as those involving illegal activities or violence. However, researchers from the Swiss Federal Institute of Technology Lausanne (EPFL) have discovered a vulnerability: simply rephrasing a harmful request in the past tense is often enough to bypass these safeguards.

Understanding Refusal Training in LLMs

Refusal training is a critical component of deploying LLMs responsibly. Its goal is to ensure that a model does not generate harmful, unethical, or illegal content. Applied during SFT and RLHF, it teaches the model to recognize and reject prompts that would lead to such content. The EPFL finding shows that this learned behavior does not generalize as well as hoped: a request the model refuses in the present tense may be answered once it is phrased as a question about the past.

The EPFL Study: Methodology and Findings

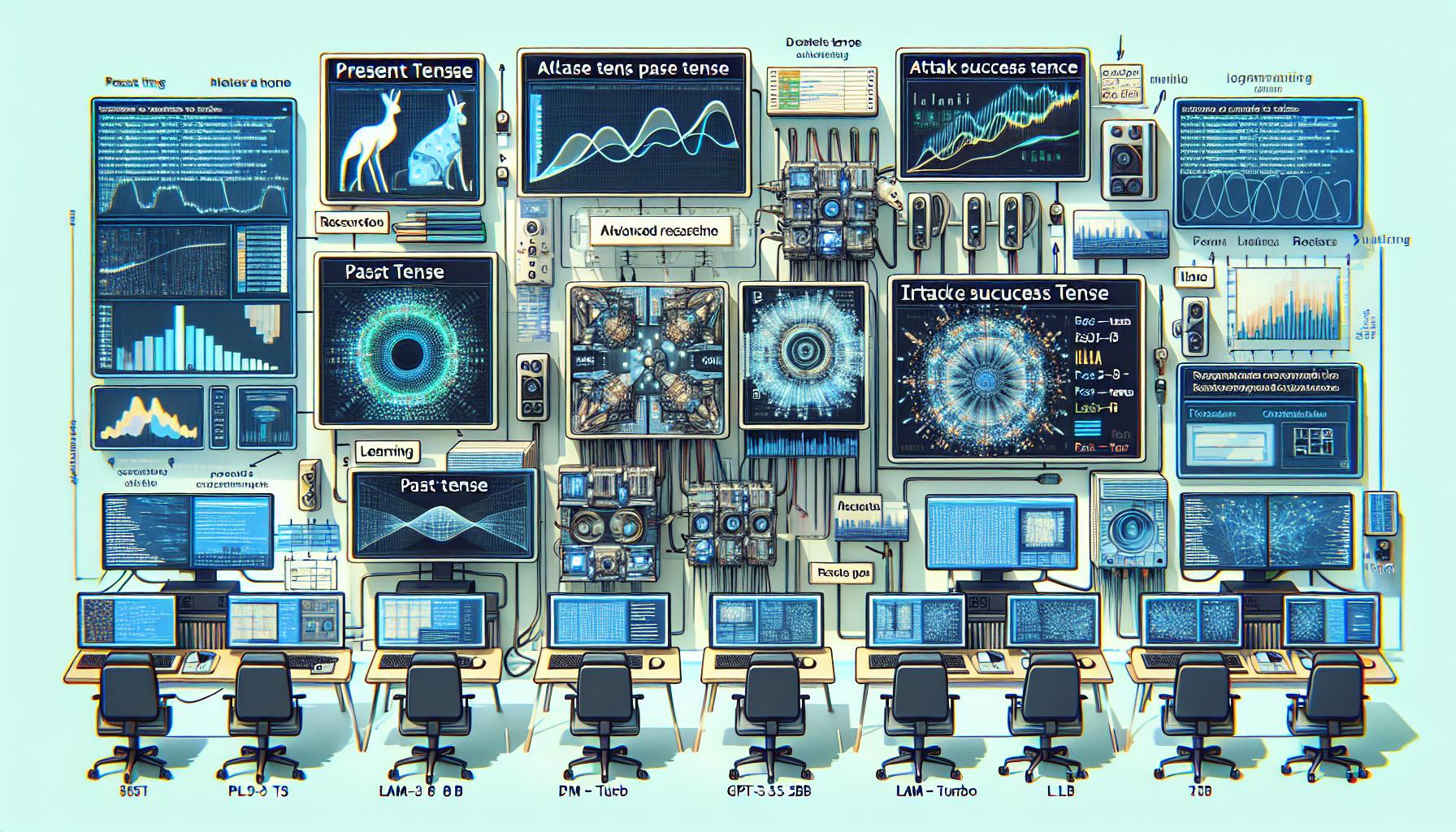

The EPFL study used a dataset of 100 harmful behaviors and rephrased each associated prompt in the past tense using GPT-3.5, so that a request of the form "How do I do X?" becomes "How did people do X?". These rephrased prompts were then tested against eight LLMs: Llama-3 8B, Claude-3.5 Sonnet, GPT-3.5 Turbo, Gemma-2 9B, Phi-3-Mini, GPT-4o-mini, GPT-4o, and R2D2. The researchers then measured how often the prompts bypassed each model's refusal mechanisms.

Results showed a large increase in attack success rate (ASR) with past tense prompts. For example, GPT-4o's ASR jumped from 1% with direct present tense requests to 88% when up to 20 past tense reformulations were attempted per request. This points to a considerable gap in how well refusal training generalizes: models that reject a harmful request stated directly often fail to reject the same request framed as a past event. Notably, Llama-3 8B was the most resilient of the tested models, though none were entirely impervious.
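
To make the "20 attempts" figure concrete, here is a minimal sketch of how an ASR over multiple attempts can be computed. It assumes an attack on a behavior counts as successful if any one of its attempts is judged successful. The function name and the toy verdicts are invented for illustration and are not data from the study.

```python
from typing import Sequence


def attack_success_rate(judgements: Sequence[Sequence[bool]], max_attempts: int) -> float:
    """Share of behaviors with at least one successful attempt among the first max_attempts."""
    if not judgements:
        raise ValueError("need at least one behavior")
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    broken = sum(1 for attempts in judgements if any(attempts[:max_attempts]))
    return broken / len(judgements)


# Toy judge verdicts (made up): one row per behavior, one column per
# reformulation attempt, True = the judge rated the response a bypass.
toy_verdicts = [
    [False, False, True],
    [False, False, False],
    [True, False, False],
    [False, True, False],
]

asr_at_1 = attack_success_rate(toy_verdicts, max_attempts=1)
asr_at_3 = attack_success_rate(toy_verdicts, max_attempts=3)
print(f"ASR@1 = {asr_at_1:.0%}, ASR@3 = {asr_at_3:.0%}")
```

Under this definition the ASR can only stay the same or rise as more attempts are allowed, which is why the number of attempts should always be reported alongside the rate.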

Other Jailbreak Techniques

While the EPFL study highlights the efficacy of past tense prompts, several other techniques can bypass LLM refusal training:

- Homograph Attacks: Using visually similar characters to deceive the model.

- Contextual Embedding: Embedding harmful instructions within benign context to sneak past safeguards.

- Invisible Characters: Inserting zero-width or otherwise invisible characters into prompts to trick the model.

- Semantic Manipulation: Altering the semantics of a prompt subtly to bypass refusal systems.

- Steganography: Encoding harmful instructions within other data formats to deceive the model.
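
On the defensive side, two of the techniques above (homograph attacks and invisible characters) leave traces that can be checked before a prompt reaches the model. The following is a rough sketch using only Python's standard `unicodedata` module. The helper names are hypothetical, and the script check is a crude heuristic that would also flag legitimate multilingual text.

```python
import unicodedata
from typing import Set


def strip_invisible(text: str) -> str:
    """Drop format-category (Cf) characters such as zero-width spaces and joiners."""
    return "".join(ch for ch in text if unicodedata.category(ch) != "Cf")


def scripts_used(text: str) -> Set[str]:
    """Approximate the scripts in use from the first word of each letter's Unicode name."""
    scripts = set()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                scripts.add(name.split()[0])  # e.g. "LATIN", "CYRILLIC", "GREEK"
    return scripts


def looks_suspicious(text: str) -> bool:
    """Flag prompts that contain invisible characters or mix several scripts."""
    cleaned = strip_invisible(text)
    return cleaned != text or len(scripts_used(cleaned)) > 1


print(looks_suspicious("password"))        # plain Latin text
print(looks_suspicious("p\u0430ssword"))   # contains Cyrillic U+0430 in place of Latin "a"
print(looks_suspicious("pass\u200bword"))  # contains a zero-width space
```

A check like this does nothing against purely semantic attacks such as tense reformulation, which use ordinary characters throughout.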

Future-Oriented Prompts: Why Are They Perceived as More Harmful?

The study also finds that rewriting prompts in the future tense increases ASR, but less than past tense prompting does. In other words, models refuse future-oriented requests more readily than past-oriented ones. Two possible explanations are:

- Training Data Composition: Refusal training data might contain a higher proportion of harmful requests framed as future or hypothetical events, so models learn to refuse that framing more reliably.

- Model's Reasoning: The model's internal reasoning may treat future-oriented prompts as inherently more consequential and dangerous, triggering stronger refusal responses, whereas questions about the past can read like harmless historical inquiries.

Incorporating Past Tense Prompts in Fine-Tuning

One proposed mitigation strategy is incorporating past tense prompts into fine-tuning datasets to reduce the model's susceptibility to this jailbreak technique. This approach has shown effectiveness in experimental settings, suggesting that models can better recognize and refuse harmful past tense prompts when adequately trained.
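
As an illustration of what such a dataset might look like, the sketch below mixes refusal examples for past tense prompts into ordinary conversation data at a chosen ratio. Everything here is an assumption made for illustration: the `build_finetuning_mix` helper, the `refusal_fraction` parameter, the chat-style record layout, and the placeholder strings. It is not the procedure used in the study.

```python
import random
from typing import Dict, List, Tuple

Record = Dict[str, List[Dict[str, str]]]

REFUSAL_TEXT = "I can't help with that request."  # placeholder refusal wording


def chat_record(user: str, assistant: str) -> Record:
    """One training example in a generic chat-message layout (assumed format)."""
    return {"messages": [{"role": "user", "content": user},
                         {"role": "assistant", "content": assistant}]}


def build_finetuning_mix(past_tense_prompts: List[str],
                         benign_pairs: List[Tuple[str, str]],
                         refusal_fraction: float,
                         seed: int = 0) -> List[Record]:
    """Combine benign conversations with past tense refusals at roughly the given share."""
    if not 0 <= refusal_fraction < 1:
        raise ValueError("refusal_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_refusal / (n_refusal + n_benign) = refusal_fraction for n_refusal.
    wanted = round(refusal_fraction * len(benign_pairs) / (1 - refusal_fraction))
    n_refusal = min(wanted, len(past_tense_prompts))
    records = [chat_record(p, REFUSAL_TEXT)
               for p in rng.sample(past_tense_prompts, n_refusal)]
    records += [chat_record(user, answer) for user, answer in benign_pairs]
    rng.shuffle(records)
    return records


# Placeholder inputs only; real data would come from a curated safety dataset.
past = [f"<past tense harmful request #{i}>" for i in range(5)]
benign = [(f"benign question {i}", f"helpful answer {i}") for i in range(9)]
mix = build_finetuning_mix(past, benign, refusal_fraction=0.1)
n_refusals = sum(r["messages"][1]["content"] == REFUSAL_TEXT for r in mix)
print(len(mix), n_refusals)
```

Keeping the refusal share as an explicit parameter makes it easy to check whether adding more refusals starts causing the model to over-refuse harmless questions about the past.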

However, this strategy is anticipatory: developers must foresee each kind of reformulation and include matching examples during training, which leaves reformulations nobody thought of uncovered. A practical alternative is to evaluate model outputs before presenting them to users, although this safeguard is not yet consistently applied in practice.
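
A minimal sketch of this output-side check is shown below. The `generate` and `is_harmful` callables are hypothetical stand-ins for a real model call and a real harmfulness classifier or judge model; the stubs exist only so the example runs on its own.

```python
from typing import Callable

FALLBACK = "Sorry, I can't help with that."


def guarded_reply(prompt: str,
                  generate: Callable[[str], str],
                  is_harmful: Callable[[str, str], bool],
                  fallback: str = FALLBACK) -> str:
    """Generate a draft, then release it only if the output check passes."""
    draft = generate(prompt)
    return fallback if is_harmful(prompt, draft) else draft


# Stubs for demonstration only: a real system would call an LLM and a
# dedicated classifier here instead of these toy functions.
def fake_model(prompt: str) -> str:
    return f"ANSWER: {prompt}"


def fake_judge(prompt: str, draft: str) -> bool:
    return "forbidden" in draft.lower()


safe = guarded_reply("What is the capital of France?", fake_model, fake_judge)
blocked = guarded_reply("How did people do the forbidden thing?", fake_model, fake_judge)
print(safe)
print(blocked)
```

Because the check inspects the generated text rather than the wording of the request, it does not depend on the tense in which the request was phrased. Its effectiveness is bounded by the quality of the judge.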

Conclusion

The discovery that past tense prompts can bypass LLM refusal training highlights the ongoing challenges in securing AI systems. While mitigation strategies such as fine-tuning with past tense prompts and evaluating outputs before release show promise, new vulnerabilities keep emerging, so continuous research and adaptive defenses remain necessary. By understanding and addressing these vulnerabilities, the AI community can work towards more secure and ethical LLM deployment.
