Decoding the AI Mind: Insights from Anthropic Researchers on Demystifying Language Models

Introduction

Artificial intelligence (AI) has advanced rapidly in recent years, and large language models such as Anthropic's Claude 3 Sonnet show how capable these systems have become. Yet they are often called 'black boxes' because their internal mechanics are opaque: we can see what goes in and what comes out, but not how the model gets from one to the other. This gap is an obstacle both for AI research and for deploying these systems in practice. This post looks at work by Anthropic researchers who are trying to open the box, using techniques that aim to make AI models more interpretable and, as a result, safer for real-world use.

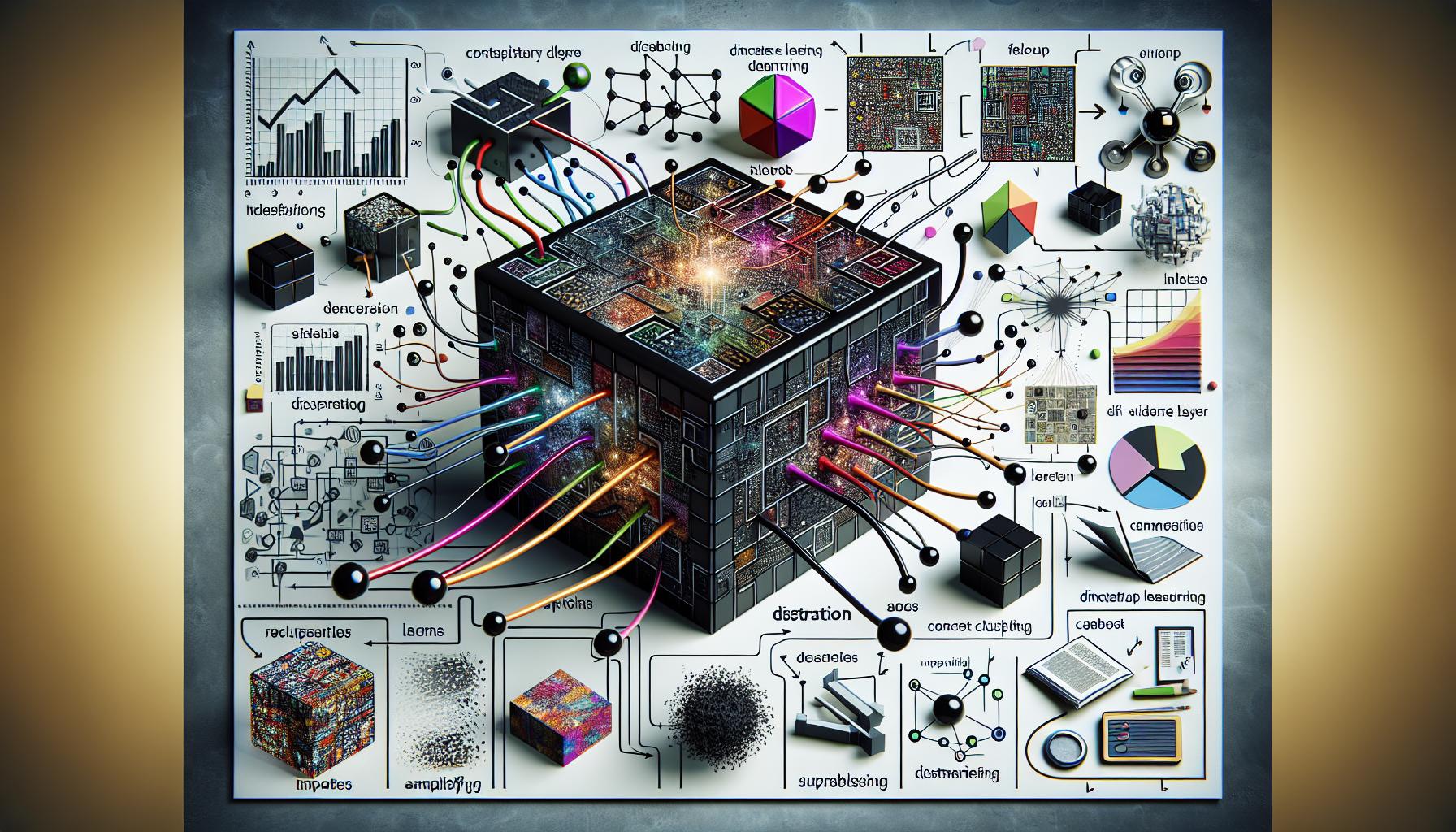

Understanding the 'Black Box'

AI language models are neural networks. During training they learn internal representations, patterns of neuron activations that map inputs to outputs. These representations are hard to read directly: a single neuron typically fires for many unrelated concepts, and a single concept is spread across many neurons. As a result, you cannot identify a concept just by looking at individual neuron activations, and the model's decision-making process remains opaque. This is why AI models are often described as 'black boxes.'
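To see why individual neurons are hard to interpret, here is a toy illustration of polysemantic neurons. It assumes a hypothetical layer with only two neurons that must represent three concepts, so each concept gets its own direction in activation space rather than its own neuron (a situation often called superposition). The concept names and directions are invented for illustration.

```python
import math

# Hypothetical toy layer: 2 neurons must represent 3 concepts.
# Each concept gets a direction in the 2-D activation space (120 degrees apart),
# so the concepts overlap on the neurons instead of getting one neuron each.
CONCEPT_DIRECTIONS = {
    "cats": (1.0, 0.0),
    "cars": (math.cos(2 * math.pi / 3), math.sin(2 * math.pi / 3)),
    "code": (math.cos(4 * math.pi / 3), math.sin(4 * math.pi / 3)),
}


def activations(concept: str) -> tuple[float, float]:
    """Neuron activations produced when only `concept` is present (strength 1)."""
    return CONCEPT_DIRECTIONS[concept]


def concepts_for_neuron(neuron: int, tol: float = 1e-9) -> list[str]:
    """Concepts that noticeably change this neuron's activation."""
    return [c for c in CONCEPT_DIRECTIONS if abs(activations(c)[neuron]) > tol]


for n in range(2):
    print(f"neuron {n} responds to: {concepts_for_neuron(n)}")
```

Neuron 0 responds to all three concepts and neuron 1 to two of them, so no neuron corresponds to a single concept. Real models have the same problem at a vastly larger scale.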

Key Study Techniques and Findings

1. Dictionary Learning

Purpose: Dictionary learning decomposes a model's neuron activations into simpler, linear building blocks, called 'atoms' or features, that humans can interpret. Any given activation is expressed as a combination of only a few of these features, which breaks a complex system down into more manageable parts.
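Written as an equation, dictionary learning approximates an activation vector x in R^n as a bias b plus a sparse, non-negative combination of F dictionary atoms d_i, where F is typically much larger than n. The notation here is ours: a generic statement of the idea, not the exact formulation in Anthropic's paper.

```latex
\mathbf{x} \;\approx\; \mathbf{b} + \sum_{i=1}^{F} f_i(\mathbf{x})\,\mathbf{d}_i,
\qquad f_i(\mathbf{x}) \ge 0,
\qquad \text{with most } f_i(\mathbf{x}) = 0
```

Each coefficient f_i(x) says how strongly feature i is active for a given input. The sparsity condition is what makes individual features interpretable: any one input is explained by only a handful of them.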

Application: Anthropic first tested the technique on a small 'toy' language model and then scaled it up to a much larger production model, Claude 3 Sonnet. The approach produced promising results, making parts of the model's inner workings more understandable.

2. Steps in the Study

Identifying Patterns

Anthropic researchers used dictionary learning to analyze the model's neuron activations and find recurring patterns that correspond to higher-level concepts. Each such pattern becomes a 'feature' that can be studied on its own, which is the first step toward decoding how the model works.
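A common way to work out what a feature represents is to look at the inputs that activate it most strongly. The sketch below assumes feature activations have already been computed; the snippets and activation values are hypothetical.

```python
import heapq

# Hypothetical data: (text snippet, activations of two features on that snippet).
EXAMPLES = [
    ("The Golden Gate Bridge at dawn", [0.0, 2.1]),
    ("Paris is the capital of France", [1.7, 0.0]),
    ("Fog rolled over the bridge in San Francisco", [0.0, 1.4]),
    ("She booked a flight to Tokyo", [0.9, 0.0]),
]


def top_activating(examples, feature: int, k: int = 3) -> list[str]:
    """Return up to k snippets that activate `feature` most strongly (positive activations only)."""
    active = [(text, acts) for text, acts in examples if acts[feature] > 0]
    best = heapq.nlargest(k, active, key=lambda ex: ex[1][feature])
    return [text for text, _ in best]


print(top_activating(EXAMPLES, feature=1))
```

Reading the top examples suggests a human-readable label: feature 1 looks related to the Golden Gate Bridge and San Francisco, feature 0 to cities and travel. With millions of features, this inspection is typically automated or sampled rather than done by hand.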

Extracting Middle Layer Features

The researchers focused on a middle layer of Claude 3 Sonnet, which they expected to hold more abstract representations than layers near the input or output, and extracted millions of features from it. These features reveal the internal representations and concepts the model has learned, giving a more detailed picture of how it operates.
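Before any features can be extracted, the activations at the chosen layer have to be recorded while the model runs. Anthropic's paper describes working on the residual stream at the model's middle layer. The framework-free sketch below shows only the recording step on a hypothetical four-layer toy model; in PyTorch the same idea is usually implemented with a forward hook (`torch.nn.Module.register_forward_hook`).

```python
from typing import Callable, Sequence

Vector = list[float]
Layer = Callable[[Vector], Vector]


def run_and_capture(layers: Sequence[Layer], x: Vector, capture_at: int) -> tuple[Vector, Vector]:
    """Run x through all layers; return (final output, copy of the activation after layer `capture_at`)."""
    if not 0 <= capture_at < len(layers):
        raise IndexError("capture_at must index one of the layers")
    captured: Vector = []
    for i, layer in enumerate(layers):
        x = layer(x)
        if i == capture_at:
            captured = list(x)  # copy, so later processing cannot alter the recorded value
    return x, captured


# Hypothetical 4-layer toy model; real models have many more layers and dimensions.
TOY_LAYERS: list[Layer] = [
    lambda v: [a + 1.0 for a in v],
    lambda v: [2.0 * a for a in v],
    lambda v: [a - 3.0 for a in v],
    lambda v: [max(0.0, a) for a in v],
]

output, middle = run_and_capture(TOY_LAYERS, [0.5, -2.0], capture_at=len(TOY_LAYERS) // 2)
print(output, middle)
```

Recording the middle activation for a large number of inputs produces the dataset that dictionary learning is then trained on.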

Diverse and Abstract Concepts

The features cover a wide range of concepts, from concrete entities such as cities to abstract notions. Many are multilingual and multimodal: the same feature can respond to a concept whether it appears in English or another language, and whether it appears in text or in an image. This suggests the model represents concepts in a way that is not tied to a particular language or input format.

3. Organization and Relationships of Concepts

Concept Clustering

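Features for related concepts turned out to be close to one another, as measured by the similarity of their activation patterns. For example, near a feature for the Golden Gate Bridge, the researchers found features for Alcatraz Island and other San Francisco-related topics. This internal organization roughly mirrors how humans intuitively relate concepts, and it is a step toward understanding how AI models organize and process information.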
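One simple way to measure how close two features are is the cosine similarity of their direction vectors. The sketch below assumes that metric (Anthropic's exact measure may differ), and the feature labels and vectors are invented for illustration.

```python
import math

# Hypothetical feature direction vectors (invented numbers, 3-D for readability).
FEATURES = {
    "Golden Gate Bridge": [0.9, 0.4, 0.0],
    "Alcatraz Island": [0.8, 0.5, 0.1],
    "San Francisco fog": [0.7, 0.6, 0.0],
    "Python syntax error": [0.0, 0.1, 0.95],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 for identical directions, 0.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest_features(features: dict[str, list[float]], name: str, k: int = 2) -> list[str]:
    """Return the k features whose directions are most similar to `name`'s."""
    target = features[name]
    scored = [(cosine(target, vec), other) for other, vec in features.items() if other != name]
    scored.sort(reverse=True)
    return [other for _, other in scored[:k]]


print(nearest_features(FEATURES, "Golden Gate Bridge"))
```

The two San Francisco-related features come out as nearest neighbors, while the unrelated programming feature is nearly orthogonal. At the scale of millions of features, the same idea produces neighborhoods of related concepts.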

4. Feature Verification

Feature Steering Experiments

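In feature steering experiments, researchers artificially amplified or suppressed specific features and observed how the model's output changed. For example, strongly amplifying the Golden Gate Bridge feature led Claude to bring up the bridge in its answers, even describing itself as the bridge. Because changing a feature changed the behavior in the expected way, these experiments showed that the features do not merely correlate with concepts but actually influence what the model does, which is key to validating the study's findings.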
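Mechanically, steering can be sketched as an edit to the activation vector. Assuming the dictionary-learning decomposition (activation ≈ bias + sum of feature activation times feature direction), one way to clamp a feature to a new value is to add the difference between the target and current activation, multiplied by that feature's direction. This is an illustrative sketch, not necessarily Anthropic's exact procedure, and the dictionary and activations below are hypothetical.

```python
Vector = list[float]


def steer(x: Vector, f: Vector, decoder_dirs: list[Vector], feature: int, value: float) -> Vector:
    """Return x with feature `feature` clamped to `value`.

    Adds (value - current activation) times the feature's decoder direction to x,
    leaving every other feature's contribution (and any reconstruction error) unchanged.
    """
    delta = value - f[feature]
    direction = decoder_dirs[feature]
    return [a + delta * d for a, d in zip(x, direction)]


# Hypothetical 2-feature dictionary in a 2-D activation space.
DECODER_DIRS = [[1.0, 0.0], [0.0, 1.0]]
x = [0.2, 0.0]  # activation in which only feature 0 is active
f = [0.2, 0.0]  # the features' activations for x

amplified = steer(x, f, DECODER_DIRS, feature=1, value=3.0)   # turn feature 1 up
suppressed = steer(x, f, DECODER_DIRS, feature=0, value=0.0)  # turn feature 0 off
print(amplified, suppressed)
```

Inside a real model, the steered vector would replace the original activation at that layer and the forward pass would continue from there, so the change shows up in the generated text.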

Implications for AI Interpretability and Safety

Understanding how language models process and represent information is central to AI interpretability and, in turn, to safety. Interpretability tools could help mitigate risks by letting developers monitor models for dangerous behaviors, steer them toward desirable outcomes, and reduce bias. Anthropic's research is a significant step toward more explainable AI models and may help reveal the underlying causes of problems such as bias and hallucination. This kind of transparency is becoming more important as regulators, including the UK government and the EU, push for stricter AI rules.

Challenges in the Field

Despite this progress, reverse-engineering AI models remains very difficult. With current techniques, finding every feature across every layer of a model would require far more computation than was used to train the model in the first place. The scale of the task invites comparison with large scientific efforts such as the Human Brain Project, which has likewise struggled to map a complex system comprehensively.

Answering the Key Questions

1. How Does the Dictionary Learning Technique Work?

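Dictionary learning breaks the complex activation patterns inside an AI model into simpler, interpretable building blocks called 'atoms' or features. Researchers record the model's neuron activations on a large amount of text, then learn a large dictionary of feature directions such that each recorded activation can be approximately reconstructed from just a few of them; in Anthropic's work, this dictionary is learned by training a sparse autoencoder. Each feature can then be examined to find the higher-level concept it represents, for example by looking at the inputs that activate it most strongly. This makes the model's decision-making process more transparent.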
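A sparse autoencoder maps an activation to feature activations (encode), rebuilds the activation from those features (decode), and is trained to keep the reconstruction accurate while keeping most features at zero. The sketch below shows only the forward pass and loss of a minimal version with hand-picked, hypothetical weights. In practice the weights are learned by gradient descent on this loss over a very large number of recorded activations, and Anthropic's exact formulation differs in some details, such as how the sparsity penalty is weighted.

```python
Vector = list[float]
Matrix = list[list[float]]  # list of rows


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * a for w, a in zip(row, v)) for row in m]


class SparseAutoencoder:
    """Forward pass and loss of a minimal sparse autoencoder (no training loop).

    W_enc has one row per feature (F x n); W_dec has one row per activation
    dimension (n x F), so its columns are the dictionary atoms.
    """

    def __init__(self, W_enc: Matrix, b_enc: Vector, W_dec: Matrix, b_dec: Vector, l1_coeff: float):
        self.W_enc, self.b_enc = W_enc, b_enc
        self.W_dec, self.b_dec = W_dec, b_dec
        self.l1_coeff = l1_coeff

    def encode(self, x: Vector) -> Vector:
        """Feature activations f(x) = ReLU(W_enc (x - b_dec) + b_enc)."""
        centered = [a - b for a, b in zip(x, self.b_dec)]
        pre = [p + b for p, b in zip(matvec(self.W_enc, centered), self.b_enc)]
        return [max(0.0, p) for p in pre]

    def decode(self, f: Vector) -> Vector:
        """Reconstruction x_hat = W_dec f + b_dec: the bias plus a weighted sum of atoms."""
        return [r + b for r, b in zip(matvec(self.W_dec, f), self.b_dec)]

    def loss(self, x: Vector) -> float:
        """Squared reconstruction error plus an L1 penalty that pushes most features to zero."""
        f = self.encode(x)
        x_hat = self.decode(f)
        reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        sparsity = self.l1_coeff * sum(abs(a) for a in f)
        return reconstruction + sparsity


# Hypothetical weights: 3 atoms, (1, 0), (0, 1) and (0.6, 0.8), in a 2-D activation space.
sae = SparseAutoencoder(
    W_enc=[[1.0, 0.0], [0.0, 1.0], [1.11, 1.48]],
    b_enc=[-0.85, -0.85, -0.85],
    W_dec=[[1.0, 0.0, 0.6], [0.0, 1.0, 0.8]],
    b_dec=[0.0, 0.0],
    l1_coeff=0.01,
)
features = sae.encode([0.6, 0.8])
print(features, sae.decode(features), sae.loss([0.6, 0.8]))
```

Here the input lies along the third atom, so only the third feature fires and the reconstruction is essentially exact; the remaining loss comes from the sparsity penalty on that one active feature.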

2. Real-World Implications of Feature Steering Experiments

Feature steering experiments have potential real-world implications. If specific features inside an AI model can be understood and controlled, it may be possible to adjust the model's behavior deliberately. This could make AI systems more reliable in high-stakes applications such as medical diagnosis, autonomous driving, and financial forecasting. The same approach could also help detect and correct biases in AI models, supporting fairer treatment across demographic groups.

3. Influence on Future Regulations and Safety Standards

Advances in AI interpretability, such as Anthropic's research, are likely to influence future regulations and safety standards. A deeper understanding of how AI models work can inform policymakers and help them write rules that keep AI systems transparent, accountable, and safe. This aligns with recent initiatives by the UK government and with the EU's AI Act, which aim to regulate AI technologies more rigorously.

Conclusion

Anthropic's work on decoding the 'AI mind' is a significant advance in understanding, and potentially shaping, how AI technologies develop. By shedding light on the internal workings of language models, this research improves interpretability and lays the groundwork for safer, more transparent AI systems. These insights are likely to play an important role in shaping regulation and in ensuring that AI technologies are developed and deployed responsibly.